IO in Golang

1. Does os.Open() load the entire file into memory?

No, the os.Open() function in Golang does not load the entire file into RAM. It returns an *os.File, a handle that wraps the operating system's file descriptor and lets you read from the file. (os.Open() opens the file read-only; to write, use os.Create() or os.OpenFile().)

I found some answers on StackOverflow about the Linux open() system call, which give hints on how os.Open() works:

No, a file is not automatically read into memory by opening it. That would be awfully inefficient. You have to read it yourself.

No it doesn’t. It just gives file a descriptor called file descriptor which then you can use to do read / write and some other operations. Think file descriptor as an abstraction or an handle over what lays on disk.

The open() function shall establish the connection between a file and a file descriptor.

func Open(name string) (*File, error)

Open opens the named file for reading. If successful, methods on the returned file can be used for reading;

The return type of os.Open() is *File, which is a pointer to a struct:

// File represents an open file descriptor.

type File struct {

*file // os specific

}

type file struct {

pfd poll.FD

name string

dirinfo *dirInfo

nonblock bool

stdoutOrErr bool

appendMode bool

}

As you can see, os.Open() just returns an *os.File, a pointer to a small struct that holds the file descriptor and some bookkeeping fields. It does not load the file into memory. Once you have it, you can use it to read data from the file. This is why we say “The open() function shall establish the connection between a file and a file descriptor.”

If you want to read the entire file into memory, you can use the os.ReadFile() function (since Go 1.16 it replaces ioutil.ReadFile() from the deprecated io/ioutil package). This function reads the entire contents of a file into a byte slice. Keep in mind that this approach is not suitable for very large files, because it loads the entire file into memory at once.

Let me demonstrate how to open a file and read its contents chunk by chunk in Golang:

package main

import (

	"fmt"

	"io"

	"log"

	"os"

)

func main() {

	// Open the file for reading

	file, err := os.Open("input.txt")

	if err != nil {

		log.Fatal(err)

	}

	// Close the file later, otherwise the file descriptor leaks

	defer file.Close()

	// Create a buffer to keep chunks that are read

	buffer := make([]byte, 1024)

	// Read from the file

	for {

		// Read a chunk

		// There is another way to read: file.ReadAt()

		n, err := file.Read(buffer)

		// Process the bytes that were read before looking at the error

		fmt.Print(string(buffer[:n]))

		if err == io.EOF {

			break

		}

		if err != nil {

			log.Fatal(err)

		}

	}

}

Why does it matter whether os.Open() loads the entire file into memory? Because if you are dealing with a very large file and RAM is limited, your program may be killed by the OS for running out of memory.

2. io.Reader interface

2.1. What is io.Reader

io.Reader is used everywhere in Go. What is it?

type Reader interface {

Read(p []byte) (n int, err error)

}

As you can see, io.Reader is an interface with a single Read() method. Any type that implements this Read() method is an io.Reader. Read reads up to len(p) bytes into p. It returns the number of bytes read (0 <= n <= len(p)) and any error encountered. Learn more: https://golang.org/pkg/io/#Reader

2.2. Common types that implement io.Reader

2.2.1. *os.File

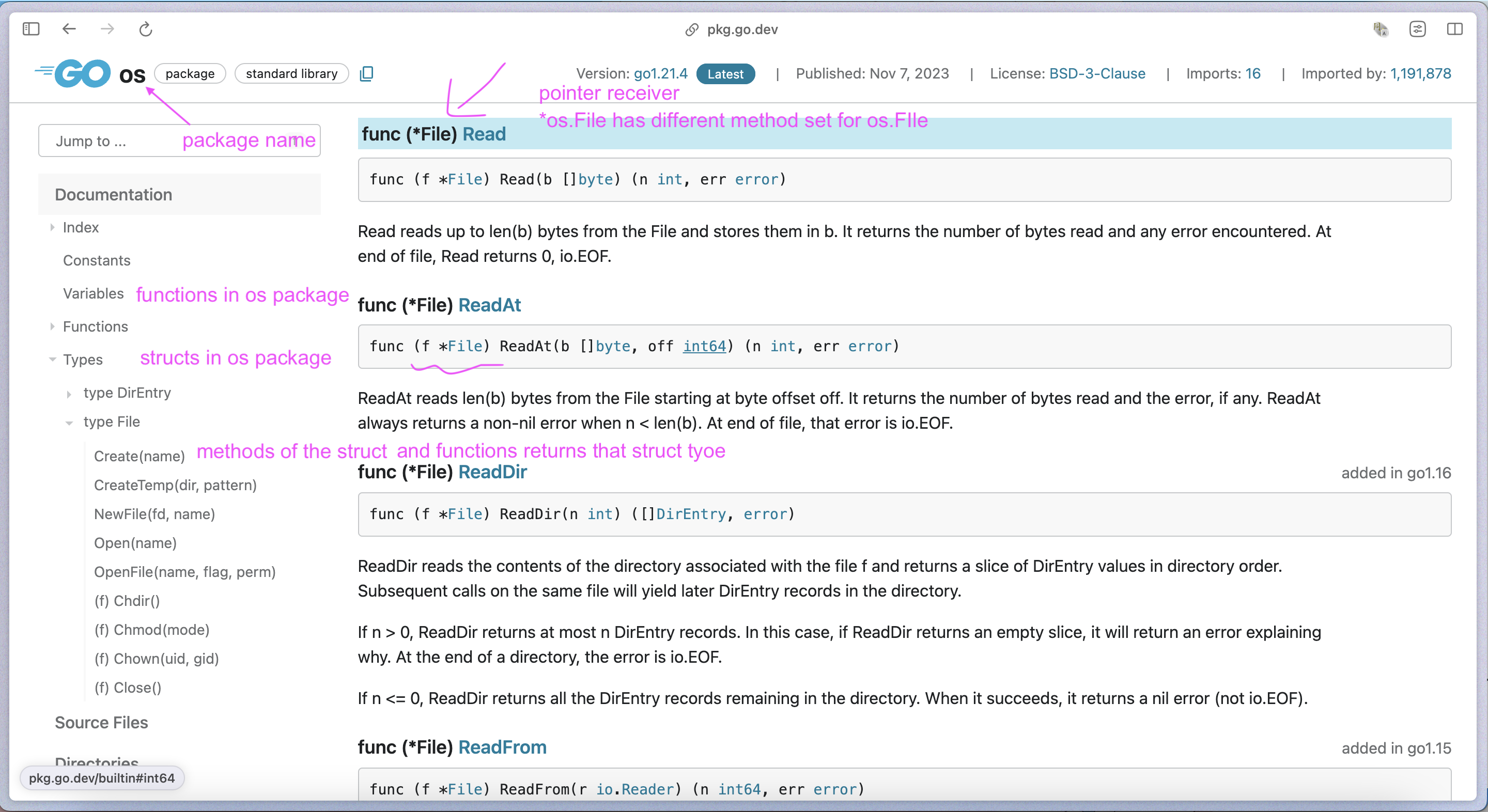

*os.File we mentioned above has the Read() method, which means it’s an io.Reader.

Note that *os.File is not the same type as os.File: they have different method sets, and Read() is declared on the pointer type. Learn more: https://davidzhu.xyz/post/golang/basics/006-interfaces/

func (f *File) Read(b []byte) (n int, err error)

2.2.2. *bytes.Buffer

// A Buffer is a variable-sized buffer of bytes with Read and Write methods. The zero value for Buffer is an empty buffer ready to use.

type Buffer struct {

// contains filtered or unexported fields

}

func (b *Buffer) Read(p []byte) (n int, err error)

As you can see, *bytes.Buffer has the Read() method, not bytes.Buffer. So *bytes.Buffer is an io.Reader, but bytes.Buffer is not.

If you try to compile the code below, the first assignment is rejected with an error along the lines of: Cannot use ‘bytes.Buffer{}’ (type bytes.Buffer) as the type io.Reader Type does not implement ‘io.Reader’ as the ‘Read’ method has a pointer receiver:

var r io.Reader

r = bytes.Buffer{} // error

r = &bytes.Buffer{} // this is ok

Learning to read the documentation provided by Go is very important: the receiver type in a method signature tells you which type actually has the method.

2.2.3. net.Conn

The net.Conn interface represents a generic network connection, such as a TCP or UDP connection. Connections returned by functions such as net.Dial() are concrete types like *net.TCPConn and *net.UDPConn that implement this interface. The net.Conn interface includes the Read() method, so every net.Conn is an io.Reader.

type Conn interface {

Read(b []byte) (n int, err error)

Write(b []byte) (n int, err error)

...

}

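2.2.4. Code example

The three snippets below are independent of each other (each would live in its own function). Errors are ignored for brevity.

// Snippet 1: read from a file (*os.File)

file, _ := os.Open("example.txt")

defer file.Close()

buffer := make([]byte, 1024)

n, _ := file.Read(buffer)

fmt.Printf("Read %d bytes from the file: %s\n", n, buffer[:n])

// Snippet 2: read from an in-memory buffer (*bytes.Buffer)

data := []byte("Hello, World!")

buffer := bytes.NewBuffer(data)

readData := make([]byte, 5)

n, _ := buffer.Read(readData)

fmt.Printf("Read %d bytes from the buffer: %s\n", n, readData[:n])

// Snippet 3: read from a network connection (net.Conn)

// Note: an HTTP server sends nothing until it receives a request,

// so this Read blocks until data arrives or the connection is closed.

conn, _ := net.Dial("tcp", "example.com:80")

defer conn.Close()

buffer := make([]byte, 1024)

n, _ := conn.Read(buffer)

fmt.Printf("Read %d bytes from the network connection: %s\n", n, buffer[:n])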

*os.File, *bytes.Buffer, and net.Conn all have the Read() method, so they are all io.Readers. Using them follows a similar pattern:

- get an io.Reader

- close the io.Reader later, if it needs closing (*os.File and net.Conn do, *bytes.Buffer does not)

- create a buffer to store one chunk of data (rather than loading all the data into memory)

- read data chunk by chunk into the buffer

3. Reader wrapper

3.1. Read user input from the console

Sometimes we want to add extra functionality to an io.Reader. For example, when reading user input from the console, we may want a specific delimiter to mark the end of the input. We can wrap os.Stdin in a bufio.Reader:

reader := bufio.NewReader(os.Stdin)

fmt.Print("Enter your input: ")

input, _ := reader.ReadString('\n')

fmt.Println("You entered:", input)

// In the bufio package, so the return type *Reader here is *bufio.Reader, not io.Reader.

func NewReader(rd io.Reader) *Reader

// In bufio package, same as above.

func (b *Reader) ReadString(delim byte) (string, error)

*bufio.Reader and os.Stdin both have the Read() method, so they are both io.Readers. But *bufio.Reader provides more functionality than os.Stdin, for example ReadString(), ReadBytes(), and ReadLine(). So we wrap os.Stdin in a bufio.Reader to get that extra functionality.

Reading user input directly from os.Stdin is less convenient: you need to convert the byte slice to a string and trim the unused part yourself, and you cannot specify a delimiter to mark the end of the input:

fmt.Print("Enter your input: ")

input := make([]byte, 1024) // Create a byte slice of a certain size

n, _ := os.Stdin.Read(input)

// Convert byte slice to a string, trimming the unused part

inputStr := string(input[:n])

fmt.Println("You entered:", inputStr)

3.2. Limit the size of HTTP request body

When handling a file uploaded by a user, you might write something like this:

func uploadHandler(w http.ResponseWriter, r *http.Request) {

// Parse the form data from the request

_ = r.ParseMultipartForm(10 << 20) // Keep at most 10MB of file parts in memory; the rest goes to temporary files

// Get the file from the request

file, _, _ := r.FormFile("file")

...

}

According to the documentation of r.ParseMultipartForm():

ParseMultipartForm parses a request body as multipart/form-data. The whole request body is parsed and up to a total of maxMemory bytes of its file parts are stored in memory, with the remainder stored on disk in temporary files. (Source: net/http package documentation, Go Packages)

This means r.ParseMultipartForm() parses the whole request body even if the uploaded file is larger than maxMemory; maxMemory only decides how much is kept in RAM. Now think about another scenario: if the client sends a very large file, the server still has to receive and process all of that data, which consumes the server's bandwidth, CPU, and disk space.

So we need to limit the size of incoming request bodies. We can use http.MaxBytesReader() to wrap the http.Request.Body like this:

func (s *server) handleUpload(w http.ResponseWriter, r *http.Request, currentDir string) (error, int) {

r.Body = http.MaxBytesReader(w, r.Body, s.maxFileSize)

if err := r.ParseMultipartForm(s.maxFileSize); err != nil {

return fmt.Errorf("file is too large:%v", err), http.StatusBadRequest

}

// obtain file from parsed form.

parsedFile, _, _ := r.FormFile("file")

...

}

Let’s see the documentation of http.MaxBytesReader():

func MaxBytesReader(w ResponseWriter, r io.ReadCloser, n int64) io.ReadCloser

http.MaxBytesReader() accepts an io.ReadCloser and returns an io.ReadCloser. To be specific, it wraps http.Request.Body (an io.ReadCloser) in an unexported http.maxBytesReader, which itself implements the io.ReadCloser interface.

func MaxBytesReader(w ResponseWriter, r io.ReadCloser, n int64) io.ReadCloser {

if n < 0 { // Treat negative limits as equivalent to 0.

n = 0

}

return &maxBytesReader{w: w, r: r, i: n, n: n}

}

As you can see, all http.MaxBytesReader() does is wrap http.Request.Body in another io.ReadCloser.

The actual reading and parsing happen later, when you call r.ParseMultipartForm(). According to its documentation, it parses the whole request body. Because the body is now wrapped, however, the amount of data that can be read is capped: if the incoming data exceeds maxFileSize while it is being read and parsed, the wrapped reader returns an error and the server stops reading from the client. This protects against clients sending excessively large requests.

So how does r.ParseMultipartForm() get the file out of the request body? Remember that http.Request.Body is an io.Reader too, which means it has the Read() method.

ParseMultipartForm() ends up calling the Read() method of http.Request.Body to pull data from the request body.

In the Read() method of http.maxBytesReader, it will check if the number of bytes read exceeds the limit, if so, it will return an error. You can check the source code of the Read() method of http.maxBytesReader:

func (l *maxBytesReader) Read(p []byte) (n int, err error) {

...

if res, ok := l.w.(requestTooLarger); ok {

res.requestTooLarge()

}

l.err = &MaxBytesError{l.i}

return n, l.err

}

MaxBytesReader prevents clients from accidentally or maliciously sending a large request and wasting server resources. If possible, it tells the ResponseWriter to close the connection after the limit has been reached.
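The limit can be observed without starting a server. The sketch below builds a request with net/http/httptest, wraps its body with http.MaxBytesReader(), and checks for *http.MaxBytesError (available since Go 1.19). The helper name bodyTooLarge and the sample sizes are assumptions for illustration.

```go
package main

import (
	"errors"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// bodyTooLarge reports whether reading a request body of the given content
// fails because it exceeds limit bytes. The name bodyTooLarge is hypothetical.
func bodyTooLarge(body string, limit int64) bool {
	req := httptest.NewRequest(http.MethodPost, "/upload", strings.NewReader(body))
	rec := httptest.NewRecorder()

	// Wrap the body; every later Read on req.Body goes through the limiter.
	req.Body = http.MaxBytesReader(rec, req.Body, limit)

	_, err := io.ReadAll(req.Body)
	var tooLarge *http.MaxBytesError
	return errors.As(err, &tooLarge)
}

func main() {
	fmt.Println(bodyTooLarge("0123456789", 5)) // true: 10 bytes exceed the 5-byte limit
	fmt.Println(bodyTooLarge("abc", 5))        // false: 3 bytes fit within the limit
}
```

In a real handler the same error would surface from r.ParseMultipartForm(), since it reads through the wrapped body.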

4. The nature of io.Reader

Readers in Go are used for reading data streams, and they have a crucial property: once you read data from a reader, that data is consumed. It’s not possible to ‘rewind’ or read the same data from the reader again unless the reader specifically supports such an operation.

As we did above, we read data from *os.File, *bytes.Buffer, and net.Conn, which are all io.Readers. When we read the data into the buffer chunk by chunk, we never recorded where we stopped, yet the next Read() continued from where the previous one ended. That is because the reader keeps track of its own position and the data already read has been consumed. Let me demonstrate this:

func main() {

reader := strings.NewReader("HelloWorld")

buf := make([]byte, 5)

_, _ = reader.Read(buf)

fmt.Println(string(buf)) // Outputs: Hello

_, _ = reader.Read(buf)

fmt.Println(string(buf)) // Outputs: World

}

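This applies to readers in general, including *os.File, *bytes.Buffer, and net.Conn. Only readers that also implement io.Seeker, such as *os.File and *strings.Reader, can move the read position back.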